AASL Column, May 2024

Barret Havens and Barbara Opar, column editors

Column by Maya Gervits, PhD, Director of the Littman Architecture Library, New Jersey Institute of Technology

Enhancing Assessment of the Impact of Scholarly Work in Design Disciplines

“Research metrics can provide crucial information that would be difficult to gather or understand by means of individual expertise. But this quantitative information must not be allowed to morph from an instrument into the goal.”

Leiden Manifesto

Over the past decade, several articles have surfaced discussing the evolution of tools created to evaluate the impact of scholarly output in humanities and design disciplines. These discussions often spotlight well-established databases like Web of Science and Scopus and software like Publish or Perish. They also mention such alternative metric tools as Altmetric, PlumX, Zotero, and Mendeley. Knowing the limitations and advantages of each tool is essential as their number is constantly growing.

A report from July 2015 titled “The Metric Tide,” issued by the Independent Review of the Role of Metrics in Research Assessment and Management, underscores persistent limitations of available resources and methods of evaluation. It highlights that coverage remains sparse in many humanities fields, and that the reliance on citation-based metrics may unfairly disadvantage disciplines not well served by mainstream citation tools. These tools exhibit disciplinary biases and inconsistencies, offering non-uniform coverage. Specifically, they tend to favor science and technology, leaving disciplines like the arts, architecture, and the humanities at a disadvantage due to their reliance on diverse scholarly outputs beyond journal articles. “The Leiden Manifesto for Research Metrics” acknowledges that “a European group of historians received a relatively low rating in a national peer-review assessment because they wrote books rather than articles in journals indexed by the Web of Science.” Although book reviews usually are not cited, it has been suggested that publications in this genre should also be considered in evaluations of research impact, alongside the quality of the journals in which those reviews are published. It should also be taken into account that the quality of the reviews themselves cannot be automatically assessed.

Despite recent attempts to index books, traditional citation tools primarily focus on journal articles, inadequately capturing the impact of books and other non-article research outputs. Impact factors and similar journal-based metrics provide only partial insights, especially in disciplines where monograph publication is a prevalent format for scholarly output.

Moreover, citation analysis must acknowledge divergent citation patterns across disciplines. Works in arts, architecture, and humanities typically require more time to accumulate citations than those in STEM fields, and many publications remain uncited.

The Leiden Manifesto for Research Metrics suggests that “reading and judging a researcher’s work is much more appropriate than relying on one number.” Citation counts vary and may not reflect valuable non-academic uses of research, so they may not be good indicators of scholarly impact. As new forms of scholarly communication emerge, “altmetrics,” metrics often based on social media that assess the effect of diverse scholarly objects, are increasingly used to complement traditional metrics, giving a more holistic understanding of the impact of research outputs. According to M. Erdt, one of the contributors to the book Altmetrics for Research Outputs Measurement and Scholarly Information Management, published by Springer in 2018, altmetrics offer new insights and “novel approaches to measure and track the impact of scholarly outputs to a wider, non-academic audience,” which is critical for architecture, art, and design. To address existing gaps, scholars increasingly turn to platforms like Google Scholar and Google Books and to academic and social networking sites such as ResearchGate and Academia.edu.

Additionally, traditional databases like JSTOR, Wiley Online Library, Academic Search Complete, and various EBSCOhost databases, as well as IEEE and ScienceDirect, offer “cited by” functions, thus enhancing metrics for multidisciplinary research endeavors.

Web of Science, now maintained by Clarivate, has expanded its coverage to include book citations, although limitations persist, particularly in architecture publishing.

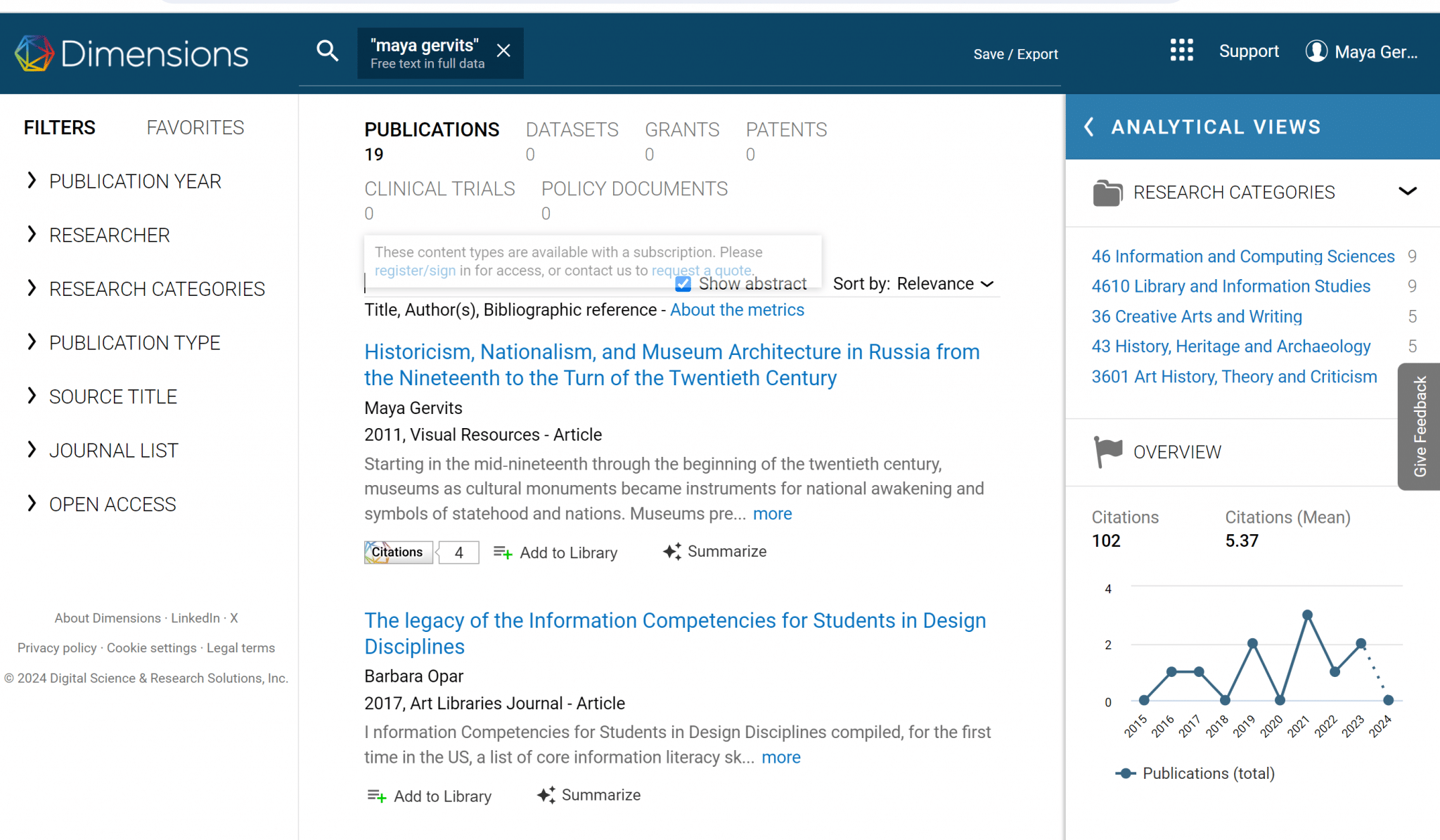

Dimensions is a relatively new but promising collection of linked research data, including open-access publications. It incorporates both citations and alternative metrics, covers resources in different formats, including books, articles, and patents, and offers a variety of filtering options. However, its coverage is not yet comprehensive.

SpringerLink (formerly Bookmetrix) allows citations to be traced, but only in Springer publications.

Several sources of book reviews, such as Choice: Current Reviews for Academic Libraries, Book Review Plus, Google Books, or Amazon book reviews, can help locate otherwise difficult-to-find citations in monographs and collections of essays.

Specialized tools cater to specific domains, enabling focused retrieval of citations. Examples include CiteSeerX and CumInCAD (Cumulative Index of Computer-Aided Architectural Design), which indexes over 12,300 records from journals and conferences worldwide, with 9,600 papers available as full-text PDFs.

ERIH PLUS (originally called the European Reference Index for the Humanities or ERIH) contains bibliographic information on academic journals in the humanities and social sciences (SSH), including articles on architectural history and urban planning. However, it indexes European publications only.

The ACM Digital Library reports download counts, which can serve as a good indicator of impact alongside the tracking of citations.

Recognizing the diverse nature of scholarly output in design disciplines, the article “New Criteria for New Media,” published in the journal Leonardo in 2009, stated that the sources of citations should be broadened to include a variety of digital resources available via the web. Currently, efforts are underway to incorporate digital sources like social media, YouTube videos, SlideShare, Vimeo, and other channels to reveal the number of views generated by professional presentations. However, because of the lack of standardization, altmetrics should be used along with traditional metrics and expert peer review to capture a broader picture of impact.

The OpenSyllabus Explorer, a recent addition, facilitates the discovery of frequently cited texts in academic syllabi.

TinEye helps reveal the impact of images on the web, adding metrics relevant to the output of artists and designers.

While all the above tools are flawed, their collective use offers a more comprehensive understanding of scholarly impact across design disciplines, emphasizing the need for a multifaceted approach to evaluation. Tools like InCites within Web of Science allow for institutional productivity analysis. Conference paper acceptance rates and publishers’ prestige can be used along with Libcitations, introduced in 2009, which gauges a book’s impact by tracking its acquisition across libraries.

It is critical to use metrics responsibly and to take into consideration both quantitative and qualitative indicators that come from different data points. The Metric Tide report indicates that “quality needs to be seen as a multidimensional concept that cannot be captured by any one indicator…” and “…quantitative indicators such as citation-based data, cannot sufficiently provide nuanced or robust measures of quality when used in isolation.”

Selected Bibliography:

Beyond Bibliometrics: Harnessing Multidimensional Indicators of Scholarly Impact. 2014. Cambridge, Massachusetts: MIT Press.

Association for Information Science and Technology. 2015. Scholarly Metrics Under the Microscope: From Citation Analysis to Academic Auditing. Medford, New Jersey: Published on behalf of the Association for Information Science and Technology by Information Today.

Measuring Scholarly Impact: Methods and Practice. 2014. Cham, Switzerland: Springer.

Meaningful Metrics: A 21st Century Librarian’s Guide to Bibliometrics, Altmetrics, and Research Impact. 2015. Chicago: Association of College and Research Libraries, a division of the American Library Association.

Holmberg, K. 2016. Altmetrics for Information Professionals: Past, Present, and Future. Chandos Publishing.

Gervits, Maya, and Rose Orcutt. 2016. “Citation Analysis and Tenure Metrics in Art, Architecture, and Design-Related Disciplines.” Art Documentation: Journal of the Art Libraries Society of North America 218–29.

Donthu, Naveen, Satish Kumar, Debmalya Mukherjee, Nitesh Pandey, and Weng Marc Lim. 2021. “How to Conduct a Bibliometric Analysis: An Overview and Guidelines.” Journal of Business Research 285–96. https://doi.org/10.1016/j.jbusres.2021.04.070.